Politicians want to classify Julian Assange as a terrorist. Insane? Only at first glance.

I've been reading about something that Assange wrote a few years ago, which basically lays out his plans for Wikileaks. It's actually a pretty neat read. Summary: Assange sees today's American government as some kind of corporate conspiracy (can't argue there), and he wants to throw sand in the works of the conspiracy by increasing the cost of secret communication (without which any conspiracy dies). He intends to do this through random attacks on government secrecy, with the goal of forcing an expensive overreaction, which will end with governments being less secretive.

My first reaction: This dovetails perfectly with a blog post that I've been meaning to write (but will probably never get around to) about the tradeoff between trust and robustness in a networked system. It's actually a really cool tradeoff - trusting another entity in a decentralized system can be viewed as a dodgy optimization, which will usually work but occasionally crashes dramatically. (Bonus: the tradeoff even has a mathematical basis, in the FLP result!) Julian Assange is giving us a real-world demonstration of this principle, by poking at the relatively cosy relationships between governments and forcing them to shift into a less useful but more secure configuration.

My second reaction: You know how the .gov has been making a lot of noise about info-terrorists, even though they have no idea what that even means? DDoS kiddies are usually held up as an example of what to watch out for, but that stuff is so trivial that I'm surprised we waste our time talking about it. Julian Assange, on the other hand, is the real deal, and he's not even terribly sophisticated. He is using the power of the Internet, and the power of the (relatively) unrestricted flow of information, to do something radical to the state.

My third reaction: Oh, man. The government doesn't know how bad this could have been. If Wikileaks had wanted to publish this stuff anonymously, it wouldn't have been terribly difficult for them to do so. The technology already exists, and has for years; it's just a matter of using it effectively. They don't like their diplomatic cables being made public as it is; imagine how much it would suck for them to have a few thousand cables appearing every month, and to be completely unable to track where they were coming from. You know how I said that Assange wasn't terribly sophisticated? If he were, he'd be doing exactly what he's doing now - we'd just have no idea who he was.

Last reaction: I can't help but worry that Julian Assange is gearing up for a dramatic exit from this world. He is simultaneously making himself extremely visible, and making a lot of very powerful enemies. Wikileaks has already published an insurance file; that's not the sort of thing you do unless you expect to have a reason to use it. If Assange does end up assassinated, that may be all the proof we need that something like Wikileaks is desperately needed in today's world.

Tuesday, November 30, 2010

Monday, November 29, 2010

Rewind

So I read the Void Trilogy by Peter F. Hamilton a few weeks ago, and one of the subplots went like this: in a world of psychics, one young man has exceptionally powerful abilities. Throughout the books, he learns of increasingly incredible things he can do, until he realizes that he can turn back time itself. Specifically, he can think about any moment in his past that he can remember clearly, and rewind the universe back to that moment (but with all his memories intact). This is where things get a little bit nuts.

For the rest of the book, he tries to make everything right with the world, because he's that sort of character. It takes a terrible toll on his mind at times, but in the end, he lives a life such that there's nothing he wants to go back and fix, and he has reached fulfillment. Happy ending, right? And then he goes and, on his deathbed, gives the secret of turning back time to everybody else in his city - and this is where my brain implodes in dismay.

If zero people know how to turn back time, then things make sense, and history proceeds in a boring linear fashion. If one person knows how to turn back time, then things are still simple enough to wrap your head around, because you can trace a single thread of narrative throughout whatever they do - by designating them the "main character" in the story, the story makes sense. But if two or more people know the secret, then things get Terribly Complicated.

Here's one trivial example of how screwed up the universe would become: imagine a game of Rock-Paper-Scissors between two especially competitive people that know how to turn back time (Rewinders?). The entire universe would be locked in a loop until one of them got bored.

There are weird issues surrounding seniority. If two Rewinders are going back and forth on something, the winner is going to be the one that can go the farthest back - back to before the other one existed, perhaps. If we follow this train of thought, then the winner in any conflict is going to be whoever is the oldest.

On the flip side, there are weird edge cases around death. If I sneak up on someone and kill them before they can react, then that's it for them, I've won, no second chances. This is the only way I can see to break out of a loop without first going through the infinite regression tango, and giving the victory to the older person. A world full of Rewinders would have a lot of immortals trying to kill each other, really - sort of like Highlander but with more mindfuck.

I'm not really going anywhere with this post. Honestly, I just thought it'd be fun to actually think through some of the consequences of a world with Rewinders. :D

Sunday, November 28, 2010

Diaspora!

So at the beginning of this summer, a group of NYU CS students started on a project to build a decentralized social network, and then made waves when they raised over $200,000 on Kickstarter, a crowdsourced funding website. They then proceeded to disappear into a cave for the entire summer, which killed the buzz around Diaspora pretty effectively. Then they put the project up on GitHub, and people immediately jumped all over them for security flaws. (Personally, I would expect to find security holes of about that magnitude in a project this young. You fix them, and you move on.)

If I had to give my opinion of the project, it's somewhere around "cautious optimism". I'm not a Ruby or a Rails fan, but there are worse languages/frameworks they could have used. I think they're striking a reasonable balance between developing in secret and developing in public. On the one hand, they promised to make everything 100% open source, but on the other hand, the open source development model is pathologically incapable of making design decisions, and for the initial stage of a project you're making nothing but. I definitely like that they're piggybacking on existing protocols.

Apparently, they were inspired by Eben Moglen's idea of a "freedom box", which makes me sort of nervous, actually. Nervous, because the idea is good in principle, but completely unworkable and sort of silly in practice. Yes, it would be useful if we all had physical control of our own social media profile, but this has tremendous implications for the reliability of the network as a whole - if my Internet connection goes down, to what extent do I disappear from the web? And, of course, I'm glossing over all the real difficulties with hosting a website on a residential Internet connection. Quite simply, our infrastructure isn't up to the job, and I don't expect that to ever change. So, I kind of hope that the Diaspora devs aren't going to waste too much time on this particular use case.

There are also a ton of fundamentally hard problems that they are going to run into, and while I remain optimistic that they're thinking about them, we won't really know how they handle them until the software is in a more complete state. For example: how do you handle security updates in a worldwide distributed system? There are already a ton of insecure Diaspora instances running around in the wild, which people brought up as soon as the code landed on GitHub, and the problem is going to get worse unless they do something about it.

Overall, I have high hopes for Diaspora, but it's simply too early to make a call about the project. I'm expecting it to advance rapidly, though, and we may be looking at a 1.0 release within a year. Whether or not it's a "Facebook-killer", like people want it to be, it has a lot of potential to be a useful tool.

Saturday, November 27, 2010

Wallet

I seem to have lost my wallet! It is fucking with my head like you wouldn't believe.

I have looked everywhere. I have looked everywhere at least twice. I've crawled on the ground looking underneath maybe half the furniture in this house. I've torn my room apart - I don't think there's a square inch in there that I haven't looked at today, except for maybe spots that you have to disassemble furniture to get to. I have taken all the cushions off of all the couches, I think. I've called the last place I saw the wallet, and since I came home straightaway after that and haven't really gone anywhere since, there are no leads there. I am this close to trying to figure out a tactful way to call up everybody that was here for Thanksgiving and asking if they walked off with my wallet. Like I said, fucking with my head.

Why am I freaking out so much? This is my first time losing my wallet, and I suppose my first time finding out just how much of a pain it is. If I can't find it before my flight on Sunday, I'm going to have to cancel my credit card and debit card, replace my insurance card, replace my Social Security card, and replace my ORCA card (free bus rides, one of many Microsoft perks) so I can ride the bus to work. (By a sheer stroke of luck, I still have my driver's license; don't even ask. XD)

And then, I have to figure out how to keep this from ever happening again. Because, see, I can't just leave a problem like this alone, and deal with it when it comes up. I'm a pathological overthinker. My reaction when a hard drive fails is to create an increasingly elaborate system of redundant storage, culminating in what I built earlier this month. My reaction to almost losing my cell phone is to keep multiple backups of all the data on it, just in case. Now that I've lost my wallet once, I'm not sure that I'll be able to ignore the possibility of it happening again - my brain just doesn't work that way. I don't know how I'll solve the problem of randomly losing things that I need to carry around everywhere I go, but I know that I'll be kind of agitated and jittery until I do - it's just how I'm wired.

My mom, bless her heart, tried to help - not by helping me look, but by trying to make me feel better about losing my wallet. Frankly, it just made things worse. She asked me to imagine the worst-case scenario; thanks for the completely generic advice! I definitely feel inclined to sit and listen to you, when I know that you're taking this far less seriously than I am! Plus, I know on an almost subconscious level that she's just trying to make me feel better, and that's not what I want. I don't want to feel better about it, and I don't want to sit down and think about "how bad could it really be". I want to find my goddamn wallet. If all your help means to me is that I have to act calmer while I'm searching frantically, then please, just stop trying.

Anyway, I haven't given up yet. I still have another day to try and figure out where it went. I am convinced that it's still in this house somewhere, and that's the most incredibly frustrating thing - it's so close, but I may not have enough time to find it! Still, I've got until my flight on Sunday. Once I get on the plane, then I'll give up, and start figuring out what all I need to replace. Until then, there's still hope.

Friday, November 26, 2010

The Science of Code

Found this blog post today via reddit. It has a really cool insight: The way people work with code is evolving into the same patterns that exist in the sciences today. At the one end you have the "physicists" - people that work with code on the lowest levels (either machine code, or algorithms, depending on your interpretation), and that can expect mathematical certainty. At the other end, you have code "biologists", that mostly work with whole organisms/programs, which are messy things, but which mostly work in mostly predictable ways.

There are a few neat consequences that you can pull out of the analogy. First, while wizards slinging machine code and novices putting scripts together are both "programmers", we probably need new designations for them, in the same way that you can't always lump physicists, chemists, and biologists together as scientists. Second, even though scripting is perceived as easier than low-level programming today, that could be because of the relative immaturity of the field, and not because it's inherently easier. See this comic, for example: physicists can look down on biologists, but biology is hard! Physics can be seen as the ultimate reductionism, and other sciences are simpler in terms of the physics they use, but harder precisely because they can't afford to reduce everything to that degree.

Higher-level programming languages, then, aren't just about simplification - they're also about specialization. (Maybe this is why domain specific languages (DSLs) are a big deal today? By creating a new language, you're jumping ahead of the existing languages in terms of specialization, which is akin to opening up a new field of study in our analogy.) By leaving some of the complexity of the lower levels behind, you're able to create new abstractions and concepts, which are interesting in and of themselves.

I think the analogy actually outstrips modern programming practices by a bit. If you want to write "organic" code, for instance, you need a specialized language like Erlang, since as far as I know it's the only language designed to handle failures of different parts of the program, and keep on running smoothly. Most current languages assume that any fault is reason to terminate the program, because the whole thing should be 100% correct. From a physicist's perspective, this is fine - if it's not 100% correct, you can't count on it doing anything right! I'm coming around to the "sloppy code" view the more I think about it, though.
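
To make the "organic" idea a bit more concrete, here's a rough sketch in Python (not Erlang, and nowhere near what Erlang actually gives you). The names `supervise` and `flaky_reciprocal` are made up for illustration. The point is only that a failure in one piece of work gets recorded and contained instead of killing the whole program, with a crash budget loosely in the spirit of a supervisor's restart limit.

```python
def supervise(worker, jobs, max_crashes=3):
    """Run worker(job) for each job, containing individual failures.

    A crash in one job is recorded and skipped rather than terminating
    the program. If more than max_crashes jobs fail, give up for real.
    """
    results, crashes = [], []
    for job in jobs:
        try:
            results.append(worker(job))
        except Exception as exc:
            # Record which job failed and what kind of error it was.
            crashes.append((job, type(exc).__name__))
            if len(crashes) > max_crashes:
                raise RuntimeError("too many crashes, giving up") from exc
    return results, crashes


def flaky_reciprocal(x):
    """Hypothetical worker: fails on one specific input."""
    return 1 / x  # raises ZeroDivisionError when x == 0


results, crashes = supervise(flaky_reciprocal, [1, 2, 0, 4])
```

The job with input `0` blows up, but the other three still produce results, and the failure is available afterwards for whoever wants to look at it.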

The assumption that all code should be 100% correct is unreasonable in this day and age. It pains me to say it, because it goes against everything I've been taught (and quite a bit of what I've said in the past). All code is going to be a bit sloppy, simply because it's written by humans, and not by the faultless code-writing machines that those humans fancy themselves to be. What we need in the next generation of languages is more robust mechanisms for handling incorrect code; if we don't do that, we're not really designing languages to be used by human beings.

Thursday, November 25, 2010

Layers of Fail

So here's an annoying multi-layered fail, notable because it affects three adjacent layers of the network stack! As any programmer will tell you, the most interesting bugs to diagnose are the ones that result from the interaction of other bugs. This particular one results in me losing messages on AIM.

First fail: U-Verse and my Mac

I don't know if anybody else has seen this problem, but my MacBook Pro cannot keep up a reliable connection to any U-Verse modem. I've seen this problem with multiple U-Verse modems, and only my Mac, so the possibilities are (in order of likelihood):

- Buggy AT&T software in the modem

- Buggy firmware for my wireless card

- Random hardware fault in my Mac (unlikely, because it only happens with U-Verse modems)

This is pretty annoying, because WiFi is supposed to be standardized! All implementations are supposed to be interoperable with all others. Either AT&T or Apple could have caught this (isn't it standard practice to test with other widely-used hardware?), so the fault could lie with either company. Luckily, I don't have U-Verse at home, so I don't have the need to diagnose this properly - it's only an issue when I'm visiting people that do, like my parents.

Second fail: Automatically dropping connections on interface down

This is such a widespread thing that I think it must be intentional, but I can't figure out any reason that it's not a terrible idea. On any OS that I've used, when a network interface goes down, all connections are severed automatically. The thing is, IP is explicitly designed to tolerate lost packets, and TCP on top of it is designed to recover from them, so dropping the connections is unnecessary. If operating systems just ignored the loss of the interface on the assumption that it'll come back up soon, everything would still work as designed, and a lot of situations involving intermittent connections would work much better!

In other words, effort went into a feature which makes things worse, which definitely counts as a failure in my book.
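
Here's a toy model of the argument, assuming nothing about how any real OS or TCP stack is implemented. The function `send_with_retries` and its parameters are hypothetical. A sender keeps retrying with a doubling timeout, and as long as the interface comes back before the retry budget runs out, the data gets through without the connection ever being torn down.

```python
def send_with_retries(link_up_at, max_retries=5, initial_timeout=1):
    """Toy model of a reliable sender over an unreliable link.

    link_up_at(t) returns True if the interface is up at time t.
    Returns the time at which the segment got through, or None if the
    sender ran out of retries. The timeout doubles after each failure.
    """
    t, timeout = 0, initial_timeout
    for _ in range(max_retries + 1):
        if link_up_at(t):
            return t          # segment delivered on this attempt
        t += timeout          # wait out the timeout, then retry
        timeout *= 2          # back off exponentially
    return None               # gave up: only now is the connection dead


def short_outage(t):
    """Interface is down for the first 5 time units, then comes back."""
    return t >= 5


def permanent_outage(t):
    """Interface never comes back."""
    return False


delivered_at = send_with_retries(short_outage)
gave_up = send_with_retries(permanent_outage)
```

With the short outage, attempts at times 0, 1, and 3 fail and the attempt at time 7 succeeds, so the connection survives. Only the permanent outage ends with the sender giving up, which is the case where killing the connection actually makes sense.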

Third fail: AIM protocol doesn't handle dropped connections cleanly

This one's pretty simple: if a connection drops, and a message is sent during the timeout before the server decides that the connection is dead, that message seems to be lost. Seems like a simple bug to fix on the server, but it's been going on for a while now, so apparently that's not going to happen. What's more irritating is that AIM already seems to save messages that are sent to somebody who's offline - it just can't detect that you're offline during the timeout period.
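
For what it's worth, here's one way a server could avoid this, sketched in Python. I have no idea how AIM's servers actually work, so the `Mailbox` class and everything in it is hypothetical. The idea is just that a message stays queued until the client acknowledges it, so anything sent into a silently dead connection is still there on reconnect.

```python
from collections import deque


class Mailbox:
    """Hypothetical per-user queue: a message is only dropped from the
    queue once the client has acknowledged receiving it."""

    def __init__(self):
        self.pending = deque()  # (msg_id, text) pairs not yet acknowledged
        self.next_id = 0

    def enqueue(self, text):
        """Queue a message for delivery and return its id."""
        msg_id = self.next_id
        self.next_id += 1
        self.pending.append((msg_id, text))
        return msg_id

    def ack(self, msg_id):
        """Client confirmed receipt, so the message can be forgotten."""
        self.pending = deque(m for m in self.pending if m[0] != msg_id)

    def redeliver(self):
        """On reconnect, everything still unacknowledged goes out again."""
        return list(self.pending)


box = Mailbox()
first = box.enqueue("hello")
box.ack(first)                     # delivered while the connection was alive
box.enqueue("are you there?")      # sent into a dead connection: no ack arrives
after_reconnect = box.redeliver()  # so it is still waiting on reconnect
```

The cost is that the client may occasionally see a message twice if an ack gets lost, which seems like a much better failure mode than never seeing it at all.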

The end result of these three (or two, if you don't want to count the middle one) bugs is that AIM is nearly unusable for me when I'm using the WiFi at my parents' house. (Yet another reason to switch to GTalk? :D No idea if it has the same problem, though.)

Wednesday, November 24, 2010

"Content" and "Consumers"

So am I the only person that gets annoyed when people talk about "content"?

The phrase is aggressively bland and intolerably reductive. "Content" can be anything. "Content" is a book, an article, a song, a cool video, a funny joke, a clever program, a picture worth a thousand words - all glommed together into a single nondescript concept. Nobody who has created something worth creating, something that they're proud of, will refer to it as "content". "Content" is anonymous. "Content" is undifferentiated. "Content" is everything, and nothing in particular. "Content" is what you call it when you don't care what it is.

"Content" is what you feed to "consumers" - nothing more.

And there's another word that bugs the hell out of me: "consumers". A "consumer" isn't a person - it's a thing, a mindless black hole, which consumes whatever you put in front of it. People are complex and individual, but we know how to market to "consumers". A "consumer" isn't something you would ever have a conversation with. A "consumer" never produces anything of value. "Consumers" are all the same, and when they're not, they're amenable to market segmentation. "Consumers" exist because they're easier to deal with than people.

I think that the mindset embodied by these two words is a sickness. When you see the world in terms of "content", and "consumers" that want it, you're hiding away all the incredible richness of the world. "Content" is culture, viewed from the outside, with the intent to put it in boxes and sell it to people - sorry, "consumers" - and it honestly freaks me out a little that there are people out there that are willing to think in those terms.

So please. Can we all stop using these words? I think we'll all be happier in the long run.

Tuesday, November 23, 2010

The Shallows

So like I mentioned yesterday, I've just finished reading The Shallows, by Nicholas Carr. It's about the effect that the Internet is having on our thought processes, on a fundamental level, and it's a pretty amazing book.

We see the Internet as an incredibly powerful tool for organizing and retrieving information, and we're right - as a multiplier for our own abilities, it's an unprecedented achievement. What some people are realizing, however, is that it is not without its downsides. Ask yourself this: how many books do you read for fun these days, and how many did you read before you discovered the Internet? Carr contends that this is not just because our reading has shifted online; instead, our brains have been rewired by the constant cheap stimulus of the web, so that we find it more difficult to read extended prose than we used to.

Historical precedent

Believe it or not, this isn't the first time that a new technology has fundamentally changed how we think. Carr looks all the way back to the invention of written language, which represented the beginning of the shift from an oral culture to a written one. There's some interesting stuff here that I didn't know about. For example, early texts were written using only letters, with no punctuation, or even spacing between words. See, written language was originally just a transcription of what people said out loud, and only intended to be read out loud. It wasn't until hundreds of years later that punctuation and spacing were added, to make reading easier.

The revolution brought on by written language reached a fever pitch with Gutenberg's invention of the printing press in the mid-1400s. All of a sudden, books (previously reserved for the wealthy) were cheap enough that everybody could have them. Simultaneously, the automation of printing meant that the cost of introducing a new book decreased dramatically, so that authors were free to experiment with radical new styles of writing. (Critics of the time decried the new styles, a product of new technology, as a massive dumbing down of literature - sound familiar?)

The introduction of reading to the population as a whole caused a fundamental change in the way we thought. Carr starts the book by just asserting this, but when he really gets into the meat of his argument, he backs it up with a lot of neurological evidence - mostly the dramatic changes that occur in our brains when we learn to read, as seen through an MRI.

Shallows

So how is the Internet changing things? Carr cites study after study that all point in the same direction - the Internet diminishes our ability to read deeply, by encouraging skimming and by allowing us to skip around to other pages easily. Furthermore, it does so on a fundamental neurological level. This is the core argument of the book, and Carr backs it up well, going all the way down to what we know about the mechanisms of human memory.

There are lots of counterarguments to be made here, of course. Even if the Internet makes it harder for us to absorb a book of information, doesn't it make up for it by giving us easy access to anything we might want to know? Doesn't the fact that looking up information is now instantaneous make it more efficient for us to know a wide variety of subjects shallowly, rather than learning a few subjects more deeply? (Personally, I'd say that it depends - some questions are harder to answer than others using a tool like Google, and it's an effect that's difficult to take into account when deciding what to learn.)

What should we do about this? It's hard to say. Really, it's hard to even say what we could do - people aren't even convinced that this is a problem today, and that would be a necessary prerequisite before we could even talk about steering the tech industry based on the effects that technology would have on us.

The technocrat in me thinks that we should just let technology happen, and deal with the consequences after the fact. Technology can solve technology's problems, and everything will work itself out, right? I used to believe that, anyway, but now I'm not so sure. (Maybe I don't have as much faith in humanity as I used to?)

In any case, though, everybody should read this book! Even if you don't agree with this author's premise, you'll probably find it an interesting read.

Monday, November 22, 2010

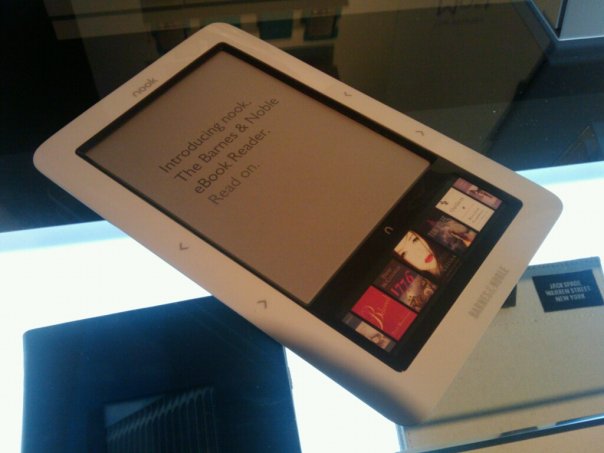

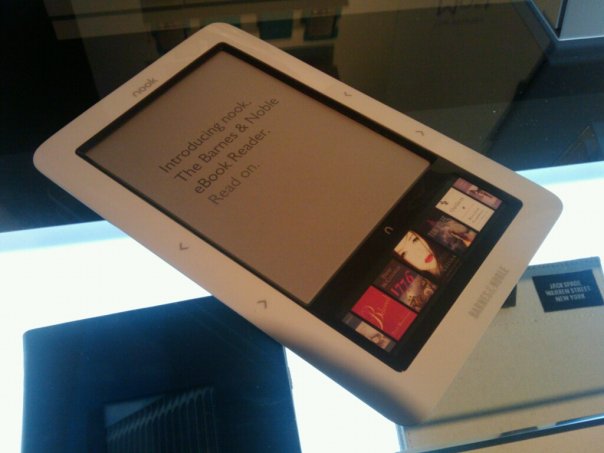

Nook!

I've so far neglected to mention it here, but I got a Nook!

Compared to the Sony Reader that I've had for a few years now, this is a huge improvement. I'll start with the most visible difference - while the Reader has buttons numbered 1-10 plus a few more for navigation, the Nook has a color touchscreen. They use it with the main E-Ink screen fairly effectively, too - the interface is generally divided between them sanely, with stuff being displayed on the big screen, and all the controls on the touchscreen.

The E-Ink screen on the Nook is noticeably better than the one on the Sony Reader. The contrast is much higher, and the resolution seems a bit better too (though that could also be the font, I guess). The Nook is also much smarter about updating the screen; where the Reader would have to refresh the whole screen with an annoying invert-everything action, the Nook can update individual pixels without disturbing the rest of the display.

The other most important difference is the inclusion of a wireless connection, like the Kindle. I'm pretty impressed by how well this feature works - I can browse the bookstore, buy a book, and download it to the device within a span of a few minutes. (Browsing over a cellular internet connection is a bit sluggish, though. If you can, it's much easier to buy the books through the B&N website.) There's also a WiFi-only version, but I think that's not as much fun. WiFi can be hard to find, and it's nice to be able to get new books from a moving vehicle. As far as ebook pricing goes, I don't have any complaints about the B&N store.

Battery life is a different story. While reading, the battery lasts pretty much forever (and by forever, I mean it should last through any one book, at least that I've found), as expected of an E-Ink device. When you put the device to sleep, though, it only lasts for a few days before needing to be charged. This is pretty disappointing, honestly, and I hope they fix it in a future software update.

Today on my flight to Houston, I brought the Nook and finished The Shallows, which is about how technology is changing our reading habits. (Wooo, irony!) I think I'll be talking more about that in tomorrow's post, but as far as the reading experience goes, it was at least as comfortable as reading a real book. Probably more so, in fact, because the Nook is easier to fit in my bag than the paperback (The Prefect, by Alastair Reynolds; somebody lent it to me and now I need to read it!) I brought along as a backup.

Summary: The Nook is kind of awesome, and I need to finish this post within the next minute or I'm not going to finish in time.

Sunday, November 21, 2010

The Man-in-the-Middle Defense

I'm not sure why this is, and it's probably a really bad idea, but for some reason as BitTorrent gets harder to use (with all the major trackers being targeted), I become more and more motivated to come up with a p2p protocol that doesn't have BitTorrent's weaknesses. So in that vein, here's something I've been thinking about.

One of BitTorrent's biggest problems is that every user knows about all the other users, and so sending nasty letters to everybody downloading a given file is as simple as grabbing a list of IP addresses and contacting a bunch of ISPs. What we need, then, is a way to anonymize connections, and transfer data between two computers without either of them knowing the identity of the other. There are already a few protocols that get us partway there: using a traditional proxy, you can mask the address of one party, but not both; using something like Tor, you can prevent any one proxy server from knowing both the client and server address, but the client still has to know the server address. So what can we do instead?

Repeaters

The golden rule of system design: When in doubt, go for the simplest thing that could possibly work!

So in this hypothetical protocol, two peers (Alice and Bob, why not) have been communicating pseudonymously. At some point, Alice wants to send Bob a file which is too big for the channel they've been using, so they both agree on an anonymous repeater. At some predetermined time (in most protocols, the time would just be "now"), they both connect to a server, and the server just repeats everything it sees on one connection to the other.
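To make that concrete, here's a rough sketch of the dumbest possible repeater, using Python's asyncio. This is just my guess at a minimal shape, not a finished design: the `Repeater` class and `pump` helper are names I made up, and it simply pairs connections in the order they arrive (first with second, third with fourth, and so on).

```python
import asyncio


async def pump(reader, writer):
    """Copy bytes from one connection to the other until EOF."""
    try:
        while True:
            data = await reader.read(4096)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


class Repeater:
    """Hypothetical repeater: pairs connections in arrival order."""

    def __init__(self):
        # (reader, writer, done_event) of a peer that has no partner yet
        self.waiting = None

    async def handle(self, reader, writer):
        if self.waiting is None:
            # First peer: park the connection until a partner shows up
            # and the relay between them has finished.
            done = asyncio.Event()
            self.waiting = (reader, writer, done)
            await done.wait()
            return
        # Second peer: relay in both directions until either side hangs up.
        other_reader, other_writer, done = self.waiting
        self.waiting = None
        try:
            await asyncio.gather(
                pump(reader, other_writer),
                pump(other_reader, writer),
            )
        finally:
            done.set()
```

You'd hand `Repeater().handle` to `asyncio.start_server`, and then Alice and Bob both connect to the same port. Note that the repeater never looks at the bytes it's forwarding, which is the whole point.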

(Once you've got the simplest thing, you may need to fix up a bunch of other problems.)

First, we need a way for a single server to distinguish between a lot of people connecting to it at once - which of them actually want to talk to each other? One solution is to have Alice and Bob agree on a shared secret ahead of time. When they both contact the repeater, they send the shared secret, and the repeater knows to establish a connection between them.
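The matching logic could look something like this. It's only a sketch: `Rendezvous` and `arrive` are hypothetical names, and keying the table by a SHA-256 digest (so the repeater isn't holding raw secrets in memory) is my own addition, not something the scheme requires.

```python
import hashlib


class Rendezvous:
    """Hypothetical matcher: pairs up connections that present the same secret."""

    def __init__(self):
        # digest of shared secret -> connection still waiting for its partner
        self.pending = {}

    def arrive(self, secret: bytes, conn):
        """Register a connection; return its partner if one is already waiting."""
        key = hashlib.sha256(secret).digest()
        partner = self.pending.pop(key, None)
        if partner is None:
            # Nobody else has shown up with this secret yet, so wait.
            self.pending[key] = conn
            return None
        return partner
```

The first peer to arrive gets `None` back and waits; the second gets the first peer's connection, and the repeater can start relaying between them.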

That's weak against eavesdroppers, though, and in today's world of relatively widespread DPI gear, you just can't trust the network. This can be solved with SSL, but only if Alice and Bob can agree on the repeater's public key ahead of time. (If they can't, SSL certs are easy enough to fake. Faking one takes a little extra effort, but if we were only defending against unmotivated eavesdroppers, this whole problem would be pretty easy!) Solving this properly will take a little more thought than I'm willing to put into it tonight, unfortunately. (Maybe passing the SSL layer through the proxy?)
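For the case where Alice and Bob did manage to agree on the repeater's key ahead of time, the check itself is simple. Here's a sketch, with one substitution I should flag: it pins a SHA-256 fingerprint of the whole certificate rather than the bare public key, because that's easier to do with the standard library. `fingerprint` and `matches_pin` are made-up names.

```python
import hashlib
import hmac


def fingerprint(der_cert: bytes) -> str:
    """Hex SHA-256 digest of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def matches_pin(der_cert: bytes, pinned_fingerprint: str) -> bool:
    """True if the certificate matches the fingerprint agreed on ahead of time."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(fingerprint(der_cert), pinned_fingerprint.lower())
```

In a real client, the DER bytes would come from something like `ssl.SSLSocket.getpeercert(binary_form=True)` after the handshake, and you'd drop the connection if `matches_pin` came back false.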

How do we keep the repeaters from learning about Alice and Bob's identities? For that, we can use proxies - we only need to keep their identities safe from the repeater, not the other way around, so existing proxy mechanisms will work for this.

How will we handle the performance cost of going through so many layers? Alice and Bob can agree on some protocol ahead of time for dividing the data across several paths, or something like that. I'm explicitly not designing that layer here - proxies and repeaters are general enough mechanisms that more complex protocols can be implemented on top of them pretty easily. (That's one of the biggest advantages of using the simplest thing!)
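Just to show how little the lower layers need to know about this, here's one way the "dividing the data across several paths" part could go. It's a sketch of plain round-robin striping with sequence numbers, which is my own pick for illustration; the function names and the tiny default chunk size are made up.

```python
def split_across_paths(data: bytes, n_paths: int, chunk_size: int = 4):
    """Deal numbered chunks out to n_paths lists, round-robin."""
    paths = [[] for _ in range(n_paths)]
    for seq, start in enumerate(range(0, len(data), chunk_size)):
        chunk = data[start:start + chunk_size]
        paths[seq % n_paths].append((seq, chunk))
    return paths


def reassemble(paths):
    """Put the chunks back in order, no matter which path delivered them."""
    chunks = sorted(chunk for path in paths for chunk in path)
    return b"".join(payload for _, payload in chunks)
```

Each list in `paths` would go through a different repeater (or proxy chain), and the receiver only needs the sequence numbers to put everything back together.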

As a completely unintended bonus, if anonymous repeaters like I've described here become widespread, that could be a solution to the problem of establishing a connection between two computers that are both stuck behind NAT.

Digital Dead Drops

A completely different solution to the original problem would be establishing "dead drops" - locations where you can drop a file for a certain amount of time, and somebody else can pick it up later. (I've already seen pastebin used like this, come to think of it!) If both parties use proxy chains, and the data is encrypted, then this is even more secure than using repeaters, because you avoid the simultaneous connection problem. With a repeater, an eavesdropper has a hint about who you're talking to, because you're connected (however indirectly) to the other user at the same time; with a dead drop, the two of you never have to be online at once.
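The server side of a dead drop is almost embarrassingly small. Here's a sketch of an in-memory one; `DeadDrop`, the slot names, and the delete-on-pickup behavior are all assumptions of mine, and the blob is expected to be encrypted before it ever gets here.

```python
import time


class DeadDrop:
    """Hypothetical in-memory dead drop: blobs expire after a time limit."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.slots = {}  # slot name -> (expiry time, blob)

    def drop(self, slot, blob, ttl_seconds):
        """Leave an (already encrypted) blob in a slot for ttl_seconds."""
        self.slots[slot] = (self.clock() + ttl_seconds, blob)

    def pick_up(self, slot):
        """Return the blob and delete it, or None if it's missing or expired."""
        expiry, blob = self.slots.pop(slot, (0.0, None))
        if blob is None or self.clock() >= expiry:
            return None
        return blob
```

Alice calls `drop` through her proxy chain, Bob calls `pick_up` through his some time later, and the server never sees both of them at once.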

The Next Problem

The other major problem with p2p networks is that search is public - by making a file available for others to download, I'm also announcing to the world that I have that file, and some people might be upset about that.

I have some ideas about how to solve that, but I've probably spent too much time blogging about this topic already. Instead, I think it's time for me to start coding these things up, and see what works. Should be fun!

Saturday, November 20, 2010

Chaos Theory

The point is, ladies and gentlemen, that chaos, for lack of a better word, is good. Chaos is right, chaos works. Chaos clarifies, cuts through, and captures the essence of the evolutionary spirit. Chaos, in all of its forms; chaos in life, in money, in love, knowledge has marked the upward surge of mankind. And chaos, you mark my words, will not only save Android, but that other malfunctioning entity called the US cellular industry.

This completely unnecessary riff on Gordon Gekko was inspired by this article, which asserts that Windows Phone will have an advantage over Android because it's less "chaotic". How horrid! That kind of platform lockdown moves Windows Phone onto the iPhone's turf.

The iPhone model (one OS running on one device, all controlled by one company) works for Apple, but I personally think it's a fluke. By launching the first smartphone platform in the multitouch paradigm, Apple snatched up enough of the market to become entrenched, and by the time competitors showed up, Apple was on the second or third revision of the iPhone, giving them a lead that could take a decade to wear off. I would argue that they succeed today in spite of (and certainly not because of!) their locked-down ecosystem. The iPhone hasn't changed significantly since the first revision, and at the rate things are going right now, it might not be premature to label the iPhone a legacy platform.

Android, on the other hand, is chaos. Google all but throws the software out there, yells "Come and get it!", and lets the carriers and manufacturers do whatever they want with it. It's sometimes inconvenient, but it also leads to some really cool stuff. Can you imagine something like the Nook (which secretly runs Android) being built on top of the iPhone OS?

I sometimes joke about Windows Phone copying the iPhone to an astonishing degree, but it still worries me. The iPhone model is something that will work once, for the first company to come up with a revolutionary new paradigm, but after that a single entity can't keep up with the rest of the industry for very long. Microsoft does seem to be doing a lot of things better than Apple (C# doesn't make me want to kill myself the same way Objective-C does, for instance), so Windows Phone is still a compelling platform, but if Microsoft wants long-term viability it's going to have to look beyond what Apple is succeeding at today.

We must remember that Windows - and, hell, the entire concept of an operating system - came into being to manage chaos, not eliminate it. Back in the Bad Old Days, hardware platforms were fairly standardized, and introducing any changes was likely to break existing software. This is where turbo buttons originally came from, and it's not a model we should be aspiring to. Having a number of different hardware profiles may make Android harder for developers to test their apps on, but it also means that the platform is much more flexible, and by forcing developers to account for different hardware profiles, Google is creating a better platform for the long term.

Any Windows program will (with very few exceptions) run on any Windows computer. Microsoft's past success with that model wasn't a fluke - it was a testament to the fundamental power of a model that allows for a wide variety of future hardware improvements. It's interesting to see how closely the development of mobile phones is mimicking the development of PCs 25 years ago; some of the players (like Apple) are even moving into the same positions. Microsoft has already managed to invent the PC once, and I hope that some people at the company remember enough to be able to invent it again.

In the end, it comes down to how mature you think the smartphone market is. If you think most of the innovation has already happened, then bet on the vertically integrated platform (like the iPhone). If you think most of the innovation has yet to happen, then bet on the open, flexible platform (like Android). It's still early enough in Windows Phone's development for Microsoft to make a choice, and I really hope they choose the latter.

Friday, November 19, 2010

Thursday, November 18, 2010

New phone!

So I've started thinking about getting a new phone!

Windows Phone

On the one hand, there's Windows Phone. I haven't had great experiences with Windows Mobile in the past, but Windows Phone is a totally new platform, and it actually seems pretty nice. I get one for "free", as a Microsoft employee - free with a two-year contract, though, so really it's closer to half price. Everybody else around the office is pretty stoked about them, and the enthusiasm is a bit infectious. The development tools look pretty awesome, too.

On the other hand, it's a totally new and somewhat unproven platform, with a radical new user interface to boot, and hardly any apps written for it yet. There's also the Microsoft factor, as much as I hate to admit it - I just don't have a ton of confidence in Microsoft's ability to execute in this space. (Here's an example: I use Microsoft My Phone to sync my phone to the web right now. It's a really useful service, but Microsoft is killing it in favor of Windows Live, and I have yet to see any official solution for migrating my data to Windows Phone. No other company in the world could get away with this kind of lack of focus.)

Pros: Employee price, easy to code on, supporting my employer, shiny

Cons: New, unproven, Microsoft

Android

In the other corner, we have Android. It's proven to be a solid competitor in the mobile space, and is usually mentioned in the same breath as iOS these days. It's also Linux-based and relatively hackable (in the good way), and I have to confess to a certain amount of nerdy glee at the thought of being able to ssh in to my cell phone and poke around. >_> The open source factor is also a plus, even if Android isn't going along with the spirit of open source at all.

There are also problems with Android, foremost among them being that manufacturers usually don't release updates to phones that aren't ridiculously popular. The Nexus One gets updates, the Droid family gets updates, and so do other phones of similar notoriety, but most aren't so lucky. I'd also be the only guy at Microsoft without a Windows Phone, which could get awkward.

Pros: Solid platform, open source(-ish), hackable, has momentum

Cons: Carriers have too much control, Google is slightly evil

Bonus: Microsoft's strategy against Android is pretty much the sketchiest thing possible (future blog topic), and actually inclines me to support Google. <_<

iPhone

The thought of carrying millions of lines of Objective-C code in my pocket just makes me shudder. No iPhone for me.

MeeGo

MeeGo is kind of the dark horse in this race. It's a joint thing between Intel and Nokia (and a few others?) to put together a proper open source Linux-based phone OS. (None of this Android-style "code drop" crap.) This would be a really compelling option for me if they'd gotten around to releasing any phones yet, but... well.

There is the Nokia N900, which you can run MeeGo on, but I don't get the impression that it's especially well supported, and the N900 is already a year old. Overall, it seems like MeeGo is at too early a stage in its development to consider, but if Nokia or Intel announced a phone tomorrow that had high-end specs and ran MeeGo natively, well, that'd certainly throw a wrench into my decision.

So. Anybody got opinions? :D

Wednesday, November 17, 2010

The Decline and Fall of the Facebook Empire (part II)

(continued from part I)

Yesterday, I blogged about a lesson that Facebook is soon going to learn. The lesson comes in two parts: when you integrate your services, you're actually competing with the Internet. And when you compete with the Internet, you lose.

The Second Thing

So what does it mean to compete with the Internet, and how can you avoid it?

The Internet is really just a consensus built around a set of protocols; it only holds together as well as it does because everybody agrees to either work within the framework of all the protocols that exist, or to build new protocols and try to build consensus around those. When you build a new service that follows existing protocols, you're working with the Internet, and strengthening the whole network, because it makes it easier for people to benefit from your innovations. So, people innovate within the framework of the Internet because the users are there, and users are there because innovation happens there - it's a virtuous cycle, in other words.

(If you've ever heard people talk about "open standards" in reverent, near-religious terms, it's because they've realized just how powerful this virtuous cycle is.)

That's why competing against the Internet is a losing proposition. Its creators built a system where anybody can improve the whole by adding their bit, and by now it's built such a tremendous momentum that you have to be as large as Facebook to make any headway against the flow.

Facebook has sort of a mixed history when it comes to open protocols. The website (their main service) is pretty locked-down; things like RSS feeds exist, but they sure don't want you to find them! On the other hand, Facebook chat is built on XMPP, and as a result it was trivial for most instant messaging clients to add support for it - an example of the power of using open protocols.

It's possible that they'll somehow manage to map their new messaging service onto an existing protocol (IMAP, if we're lucky), but I have my doubts. If they were doing that, it seems like the sort of major feature that they'd want to mention! Far more likely that they'll expect you to use it through their web interface, and maybe have some limited integration with other services.

On the other hand, some companies actually get it. I may have misclassified Google the other day, for instance - they expose a lot of their services through their GData APIs, and tend toward using open standards without restrictions when possible. (For instance, they were one of the first free webmail services to give people IMAP and POP access at no extra charge.)

Tuesday, November 16, 2010

The Decline and Fall of the Facebook Empire (part I)

There is a hard lesson that Internet titans learn eventually. AOL was the first to learn it, and it led to their long, drawn-out demise. Yahoo learned it, and is now a shadow of its former self. Microsoft and Google have both come up against it, but have been reasonably successful in avoiding it; the former by shoveling money at the problem, and the latter through sheer heroic engineering effort. And, with yesterday's announcement about a unified messaging system, Facebook is about to learn it - and it doesn't have the resources to power through the way some of the others did.

The lesson comes in two parts: when you integrate your services, you're actually competing with the Internet. And when you compete with the Internet, you lose.

The First Thing

Why does integration equate to competing with the Internet?

Let's say I think this new messaging platform is awesome. IM? Great, all my friends use Facebook chat anyway! SMS? Sounds pretty cool! Email? ...Wait, hold on a second. You mean I have to give up Gmail to get all the benefits of this integration? :(

In other words, this kind of integration is a form of soft vendor lock-in: they make it more convenient to use all of their services, rather than using the best of what the 'Net has to offer. AOL is probably the strongest example of this - back in the day, they combined an ISP, web browser, and email service into one package. And, they quickly started hemorrhaging customers, because they couldn't compete with the best ISPs, browsers, and email services all at the same time.

See, savvy Internet users know how to mix and match services. Back in the '90s, AOL wasn't just competing against all the other companies in the same space - it was competing against every possible permutation of an ISP, a web browser, and an email service that people could come up with. And today, Facebook is trying something similar. By combining a bunch of similar services under the umbrella of "messaging", Facebook is competing with every possible permutation of messaging services available online.

And here's the kicker: They're not just competing with every permutation of services that do the things they do. They're also competing with every new service that lets people communicate. They don't have an answer for things like Skype, for instance. And the thing about new services is, there's never a right answer for how to deal with them. The only right answer is not to put yourself in a situation where you're pressured to mimic every new technology that comes along.

I mentioned earlier that savvy Internet users can often find better combinations of services than any one company can provide. What about the non-savvy users? They stick around, sometimes for years after a service has lost its vitality, and you end up with digital ghettoes like Hotmail and AOL. That's how I see yesterday's announcement - it's Facebook's first step toward becoming yet another digital ghetto.

(continued in part II, tomorrow!)

Monday, November 15, 2010

Procrastination

So here's the thing about procrastination: I think I actually enjoy it. For some reason, having something that I need to do, and procrastinating by doing something else, is way more fun than just doing the something else. This worries me.